Welcome to My Homepage!

I’m a partner at BeingBeyond, a startup dedicated to advancing foundation models for general-purpose humanoid robots, where I collaborate closely with Prof. Zongqing Lu. I currently lead the Embodied Multimodal Pretraining team at BeingBeyond, with projects including the Being-H, Being-M and Being-VL series. Prior to this, I was a researcher at the Beijing Academy of Artificial Intelligence (BAAI). I obtained my PhD and bachelor’s degree from Renmin University of China (RUC), under the guidance of Prof. Qin Jin. My research primarily focuses on human behavior understanding, vision-and-language learning, and the development of open-world embodied agents. Currently, I’m working towards building an intelligent humanoid robot. For more details, please refer to my CV.

Join Us!

We are actively recruiting full-time researchers and interns to join our team. If you’re passionate about embodied AI, feel free to reach out.

Our research blogs: https://research.beingbeyond.com/

Research Interest

- Large multimodal models

- Human behavior and motion understanding

- Vision-language-action models

- Humanoid robots

🔥 News

- 2026.01: ⭐ We release Being-H0.5, our latest cross-embodiment foundation VLA.

- 2025.09: 🎉 Two papers are accepted to NeurIPS’25.

- 2025.08: ⭐ We present Being-M0.5, an improved version of Being-M0 with real-time controllability.

- 2025.07: ⭐ We release Being-VL-0.5, the next version of our LMM, including code and checkpoints.

- 2025.07: ⭐ We release Being-H0, the first VLA pretrained from large-scale human videos with hand motion!

- 2025.06: 🎉 Three papers are accepted to ICCV’25.

- 2025.06: 🏆 We won 1st place in GemBench Challenge at CVPR 2025 Workshop GRAIL.

- 2025.05: ⭐ We present Being-M0, our first million-level motion model, which was accepted to ICML 2025.

- 2024.10: ⭐ We present Being-VL-0, which was accepted to ICLR 2025.

📝 Publications

* denotes equal contribution, † denotes project lead, ✉ denotes corresponding author

BeingBeyond Series

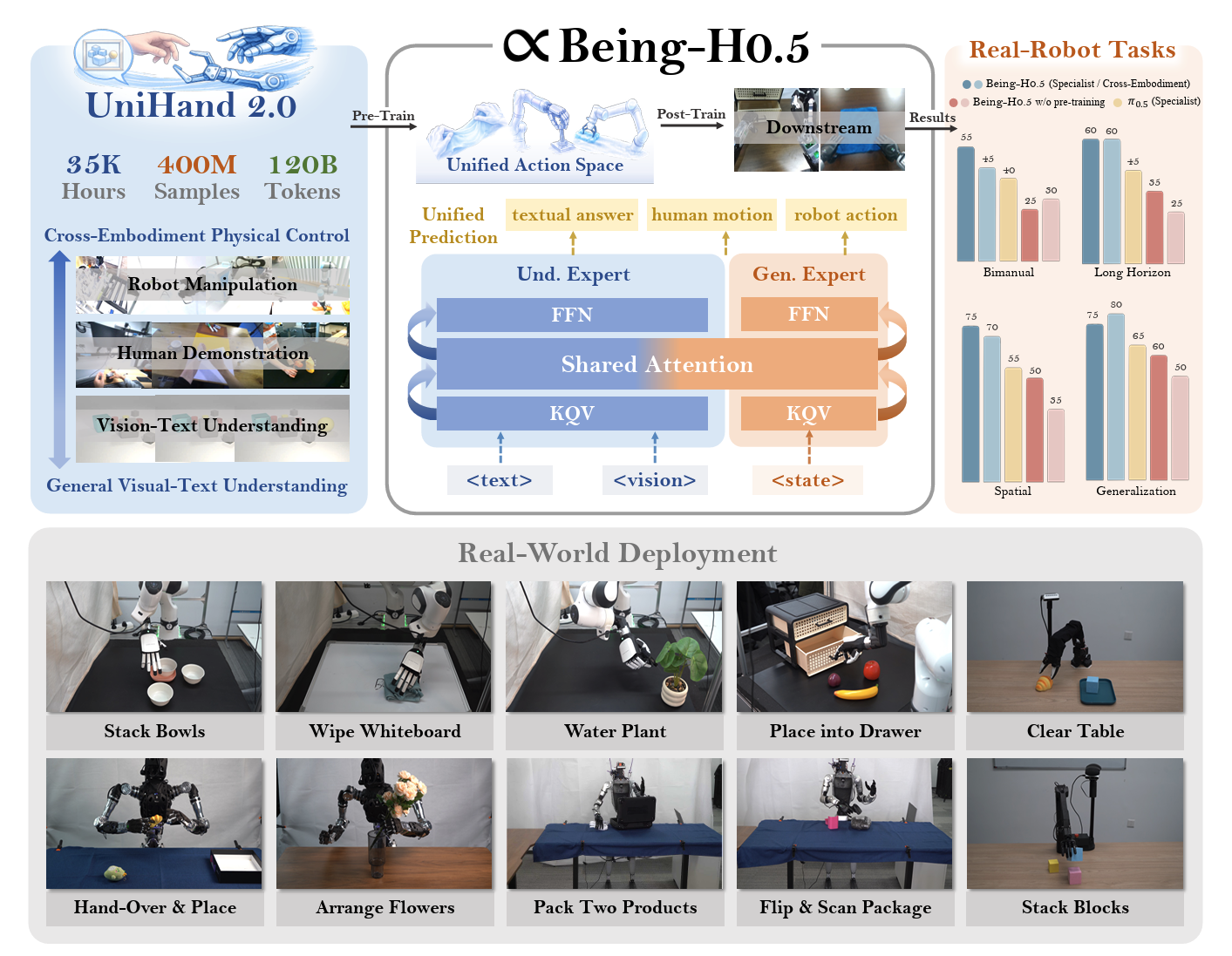

Being-H0.5: Scaling Human-Centric Robot Learning for Cross-Embodiment Generalization

Hao Luo*, Ye Wang*, Wanpeng Zhang*, Sipeng Zheng*†, Ziheng Xi, Chaoyi Xu, Haiweng Xu, Haoqi Yuan, Chi Zhang, Yiqing Wang, Yicheng Feng, Zongqing Lu✉

- Robots do not just look different. They also act through different physical control languages: different kinematics, sensors, action conventions, and timing. Being-H0.5 is our attempt to make a single Vision-Language-Action model transfer across those differences without turning into a brittle collection of per-robot hacks. The model is trained on over 35,000 hours of data, including 16,000 hours of human videos and 14,000 hours of robot manipulation data spanning 30+ embodiments.

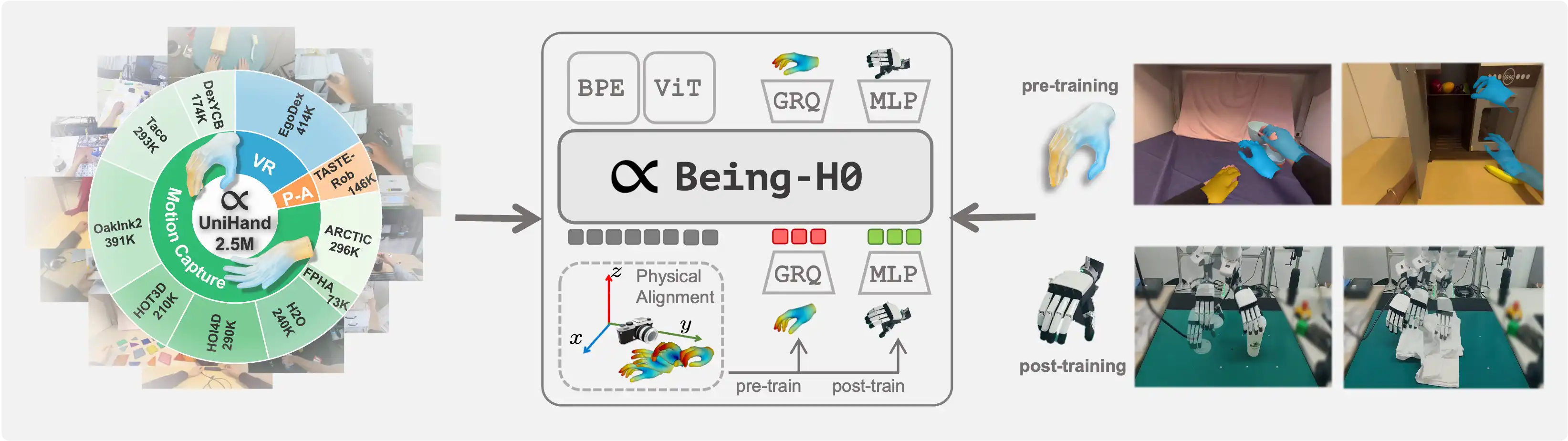

Being-H0: Vision-Language-Action Pretraining from Large-Scale Human Videos

Hao Luo*, Yicheng Feng*, Wanpeng Zhang*, Sipeng Zheng*†, Ye Wang, Haoqi Yuan, Jiazheng Liu, Chaoyi Xu, Qin Jin, Zongqing Lu✉

- Being-H0 is the first VLA pretrained from large-scale human videos with hand motion.

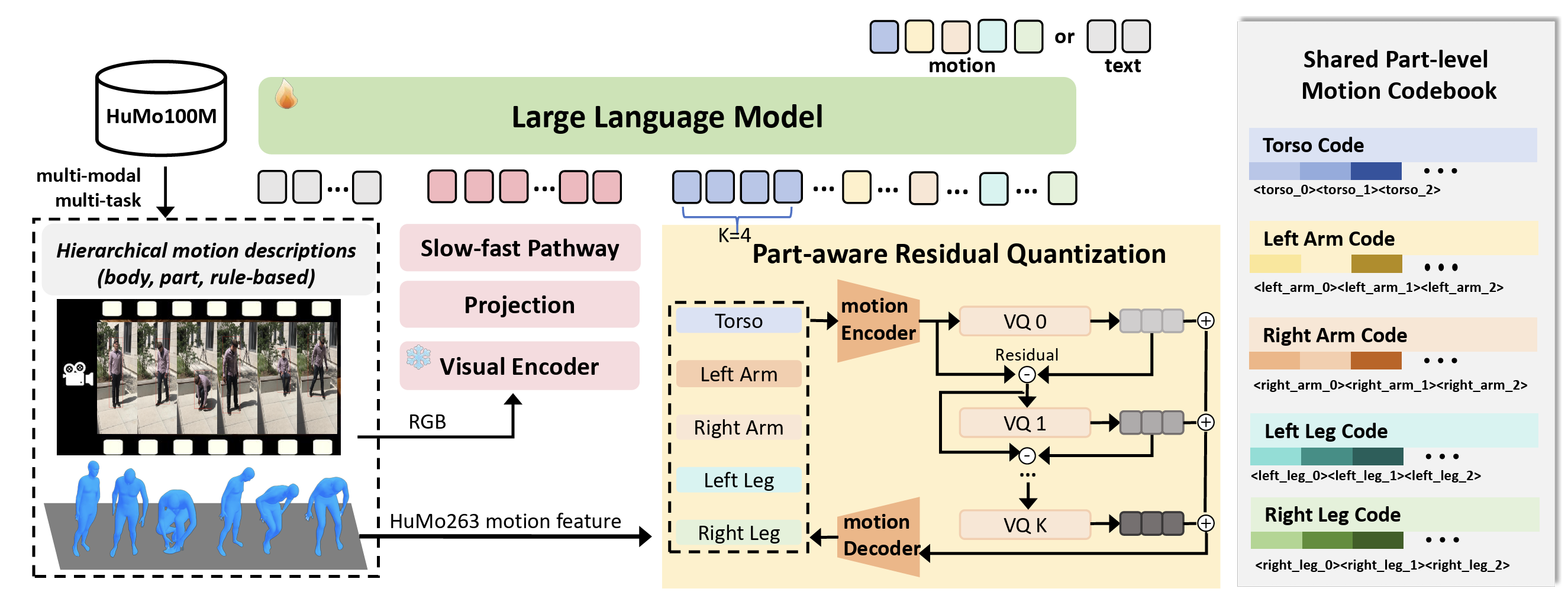

Being-M0.5: A Real-Time Controllable Vision-Language-Motion Model

Bin Cao*, Sipeng Zheng*, Ye Wang, Lujie Xia, Qianshan Wei, Qin Jin, Jing Liu, Zongqing Lu✉

ICCV25

- Being-M is the first large motion generation model scaling to million-level motion sequences.

Being-M0: Scaling Large Motion Models with Million-Level Human Motions (ICML 2025)

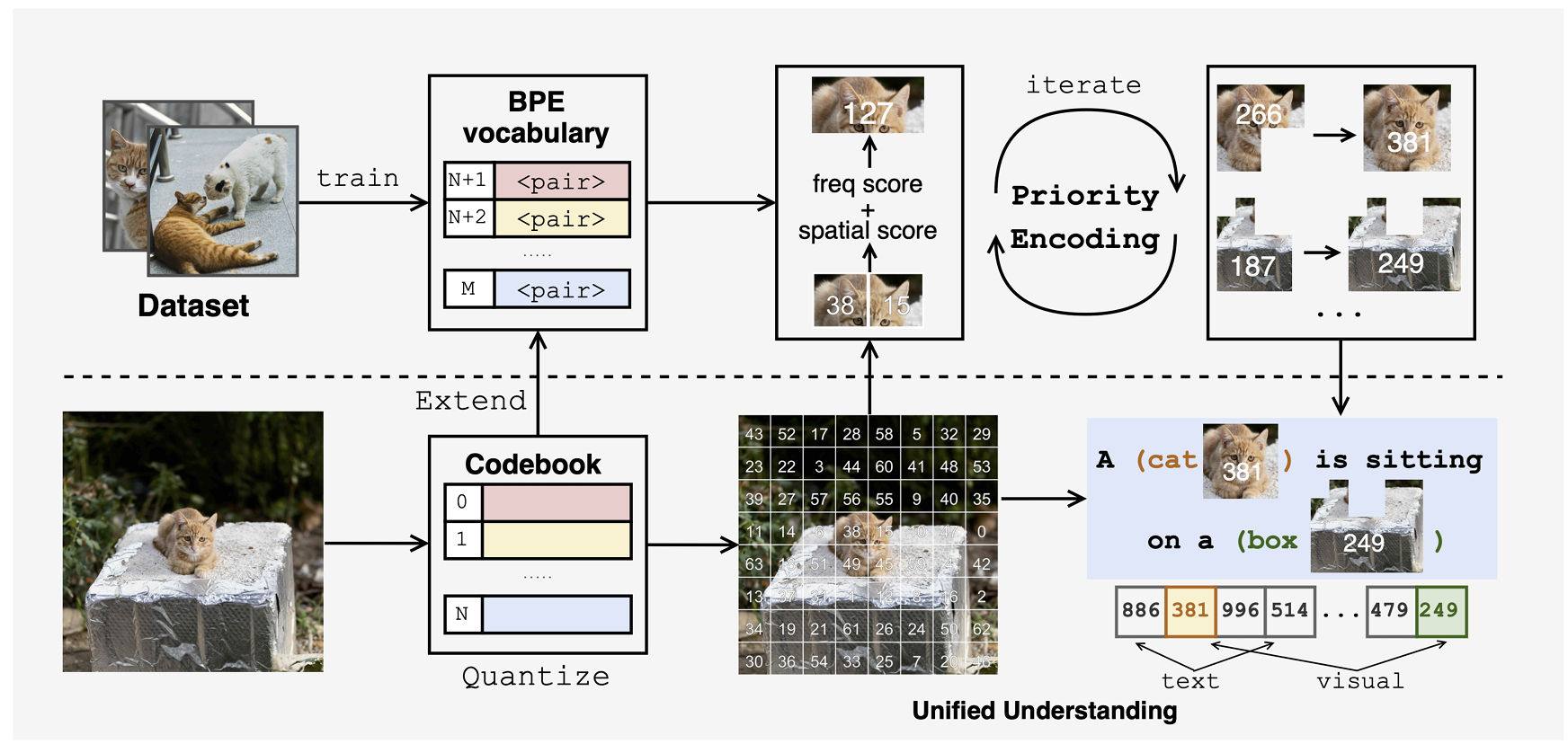

Being-VL-0.5: Unified Multimodal Understanding via Byte-Pair Visual Encoding

Wanpeng Zhang, Yicheng Feng, Hao Luo, Yijiang Li, Zihao Yue, Sipeng Zheng, Zongqing Lu✉

ICCV25 (Highlight)

- Being-VL is the first large multimodal model built on compressed discrete visual representations obtained with 2D byte-pair encoding (2D-BPE).

Being-VL-0: From Pixels to Tokens: Byte-Pair Encoding on Quantized Visual Modalities (ICLR 2025)

🎙 Before BeingBeyond

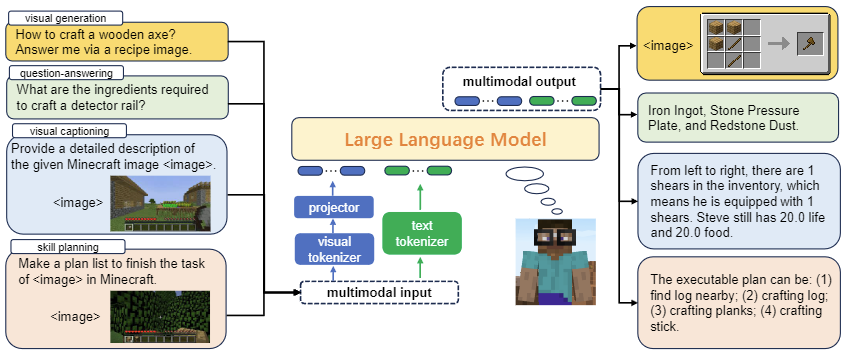

Steve-Eye: Equipping LLM-based Embodied Agents with Visual Perception in Open Worlds, Sipeng Zheng, Jiazheng Liu, Yicheng Feng, Zongqing Lu✉

ICLR24 (Spotlight 5.02%)

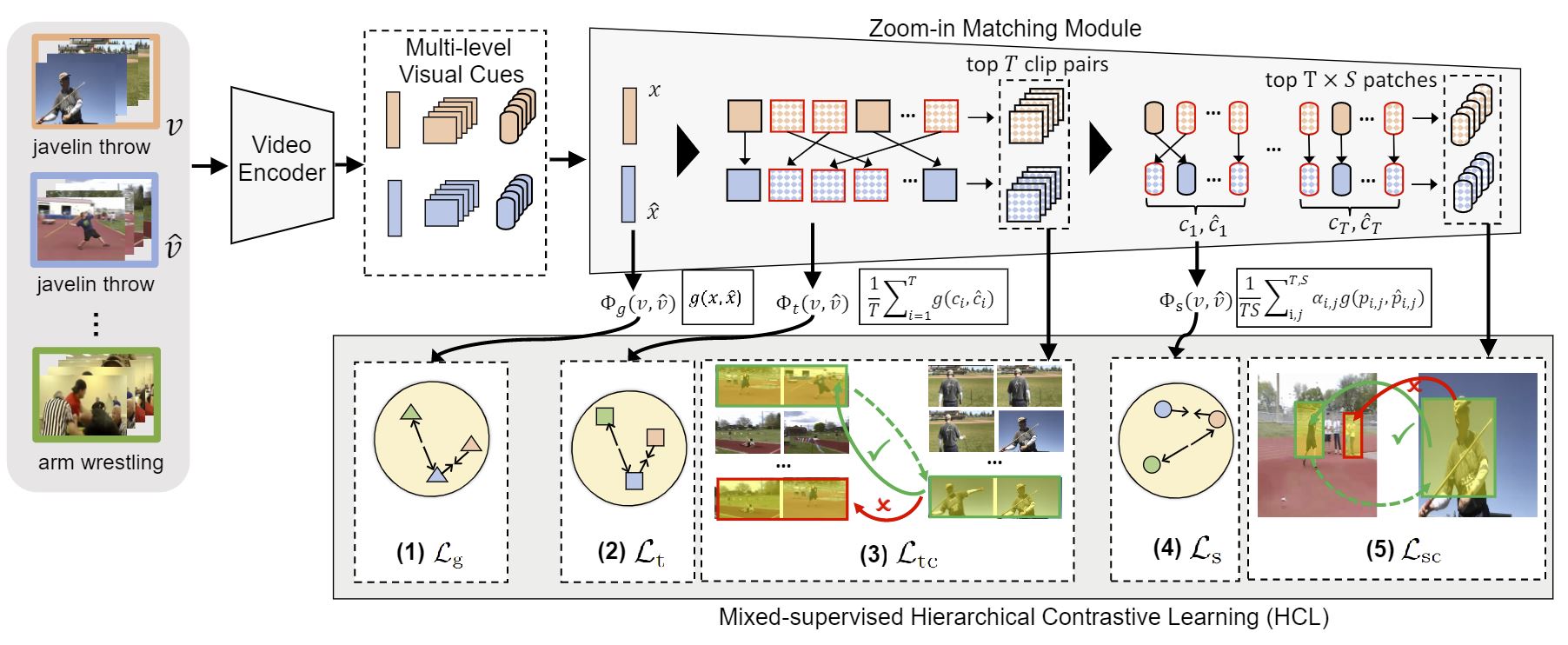

Few-shot Action Recognition with Hierarchical Matching and Contrastive Learning, Sipeng Zheng, Shizhe Chen, Qin Jin✉

ECCV22

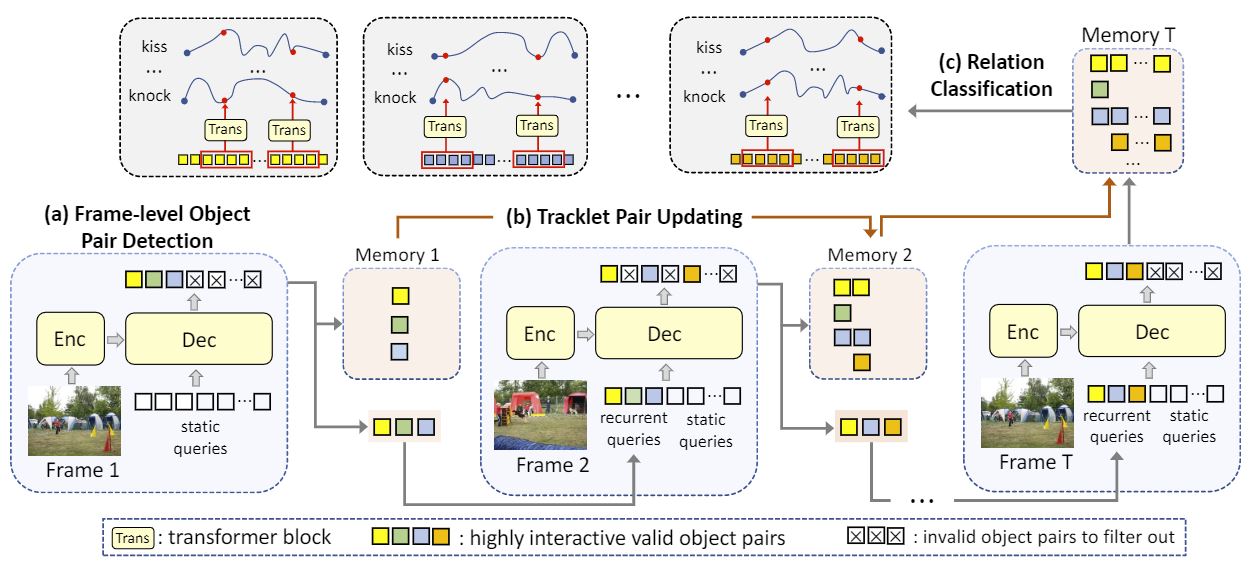

VRDFormer: End-to-end video visual relation detection with transformer, Sipeng Zheng, Shizhe Chen, Qin Jin✉

CVPR22 (Oral 4.14%)

📚 Paper List

- [Arxiv 2026] Being-H0.5: Scaling Human-Centric Robot Learning for Cross-Embodiment Generalization, Hao Luo*, Ye Wang*, Wanpeng Zhang*, Sipeng Zheng*†, Ziheng Xi, Chaoyi Xu, Haiweng Xu, Haoqi Yuan, Chi Zhang, Yiqing Wang, Yicheng Feng, Zongqing Lu✉, Blog | Code | Model.

- [Arxiv 2025] Predictive Embedding as Latent Action: Towards VLA Pretraining in the Wild, Hao Luo, Ye Wang, Wanpeng Zhang, Haoqi Yuan, Yicheng Feng, Haiweng Xu, Sipeng Zheng, Zongqing Lu✉.

- [Arxiv 2025] DiG-Flow: Discrepancy-Guided Flow Matching for Robust VLA Models, Wanpeng Zhang, Ye Wang, Hao Luo, Haoqi Yuan, Yicheng Feng, Sipeng Zheng, Qin Jin, Zongqing Lu✉, Blog | Code.

- [Arxiv 2025] Robust Motion Generation using Part-level Reliable Data from Videos, Boyuan Li, Sipeng Zheng, Bin Cao, Ruihua Song, Zongqing Lu.

- [Arxiv 2025] OpenT2M: No-frill Motion Generation with Open-source, Large-scale, High-quality Data, Bin Cao, Sipeng Zheng, Hao Luo, Boyuan Li, Jing Liu, Zongqing Lu.

- [Arxiv 2025] Spatial-Aware VLA Pretraining through Visual-Physical Alignment from Human Videos, Yicheng Feng, Wanpeng Zhang, Ye Wang, Hao Luo, Haoqi Yuan, Sipeng Zheng, Zongqing Lu✉, Page | Project.

- [Arxiv 2025] Being-H0: Vision-Language-Action Pretraining from Large-Scale Human Videos, Hao Luo*, Yicheng Feng*, Wanpeng Zhang*, Sipeng Zheng*†, Ye Wang, Haoqi Yuan, Jiazheng Liu, Chaoyi Xu, Qin Jin, Zongqing Lu✉, Blog | Code | Model.

- [Arxiv 2025] RL from Physical Feedback: Aligning Large Motion Models with Humanoid Control, Junpeng Yue, Zepeng Wang, Yuxuan Wang, Weishuai Zeng, Jiangxing Wang, Xinrun Xu, Yu Zhang, Sipeng Zheng, Ziluo Ding, Zongqing Lu✉, Page.

- [NeurIPS 2025] OpenMMEgo: Enhancing Egocentric Understanding for LMMs with Open Weights and Data, Hao Luo, Zihao Yue, Wanpeng Zhang, Yicheng Feng, Sipeng Zheng, Deheng Ye, Zongqing Lu.

- [NeurIPS 2025] EgoDTM: Towards 3D-Aware Egocentric Video-Language Pretraining, Boshen Xu, Yuting Mei, Xinbi Liu, Sipeng Zheng, Qin Jin✉, Code.

- [EMNLP 2025] Taking Notes Brings Focus? Towards Multi-Turn Multimodal Dialogue Learning, Jiazheng Liu, Sipeng Zheng, Börje F Karlsson, Zongqing Lu✉.

- [ICCV 2025] Unified Multimodal Understanding via Byte-Pair Visual Encoding, Wanpeng Zhang, Yicheng Feng, Hao Luo, Yijiang Li, Zihao Yue, Sipeng Zheng, Zongqing Lu✉, Blog | Code | Page.

- [ICCV 2025] MotionCtrl: A Real-time Controllable Vision-Language-Motion Model, Bin Cao*, Sipeng Zheng*, Ye Wang, Lujie Xia, Qianshan Wei, Qin Jin, Jing Liu, Zongqing Lu✉.

- [ICCV 2025] VideoOrion: Tokenizing Object Dynamics in Videos, Yicheng Feng*, Yijiang Li*, Wanpeng Zhang, Sipeng Zheng, Zongqing Lu✉.

- [Arxiv 2025] QuadrupedGPT: Towards a Versatile Quadruped Agent in Open-ended Worlds, Yuting Mei*, Ye Wang*, Sipeng Zheng, Qin Jin✉, Page.

- [ICML 2025] Scaling Large Motion Models with Million-Level Human Motions, Ye Wang*, Sipeng Zheng*, Bin Cao, Qianshan Wei, Weishuai Zeng, Qin Jin, Zongqing Lu✉, Page.

- [ICLR 2025] EgoNCE++: Do Egocentric Video-Language Models Really Understand Hand-Object Interactions?, Boshen Xu, Ziheng Wang, Yang Du, Zhinan Song, Sipeng Zheng, Qin Jin✉, Code.

- [ICLR 2025] From Pixels to Tokens: Byte-Pair Encoding on Quantized Visual Modalities, Wanpeng Zhang, Zilong Xie, Yicheng Feng, Yijiang Li, Xingrun Xing, Sipeng Zheng, Zongqing Lu✉, Page.

- [3DV 2025] SPAFormer: Sequential 3D Part Assembly with Transformers, Boshen Xu, Sipeng Zheng, Qin Jin✉, Code.

- [ECCV 2024] UniCode: Learning a Unified Codebook for Multimodal Large Language Models, Sipeng Zheng, Bohan Zhou, Yicheng Feng, Ye Wang, Zongqing Lu✉.

- [ICLR 2024] Steve-Eye: Equipping LLM-based Embodied Agents with Visual Perception in Open Worlds, Sipeng Zheng, Jiazheng Liu, Yicheng Feng, Zongqing Lu✉, Page.

- [NAACL 2024] LLaMA Rider: Spurring Large Language Models to Explore the Open World, Yicheng Feng, Yuxuan Wang, Jiazheng Liu, Sipeng Zheng, Zongqing Lu✉, Page.

- [ACM-MM 2023] POV: Prompt-Oriented View-agnostic Learning for Egocentric Hand-Object Interaction in the Multi-view World, Boshen Xu, Sipeng Zheng, Qin Jin✉, Page | Code.

- [AAAI 2023] No-frills Temporal Video Grounding: Multi-Scale Neighboring Attention and Zoom-in Boundary Detection, Qi Zhang, Sipeng Zheng, Qin Jin✉, Code.

- [CVPR 2023] Open-Category Human-Object Interaction Pre-Training via Language Modeling Framework, Sipeng Zheng, Boshen Xu, Qin Jin✉.

- [AAAI 2023] Accommodating audio modality in CLIP for multimodal processing, Ludan Ruan, Anwen Hu, Yuqing Song, Liang Zhang, Sipeng Zheng, Qin Jin✉.

- [IEEC 2023] Anchor-Based Detection for Natural Language Localization in Ego-Centric Videos, Sipeng Zheng, Bei Liu, Jianlong Fu, Wen-Huang Cheng✉, Code.

- [ECCV 2022] Few-shot Action Recognition with Hierarchical Matching and Contrastive Learning, Sipeng Zheng, Shizhe Chen, Qin Jin✉, Code.

- [CVPR 2022] VRDFormer: End-to-end video visual relation detection with transformer, Sipeng Zheng, Shizhe Chen, Qin Jin✉, Code.

- [CVPR 2022 workshop] Exploring anchor-based detection for ego4d natural language query, Sipeng Zheng, Qi Zhang, Bei Liu, Qin Jin✉, Jianlong Fu, Code.

- [ICME 2020] Skeleton-based interactive graph network for human object interaction detection, Sipeng Zheng, Shizhe Chen, Qin Jin✉, Code.

- [ACM-MM 2019] Visual relation detection with multi-level attention, Sipeng Zheng, Shizhe Chen, Qin Jin✉.

- [ACM-MM 2019] Relation understanding in videos, Sipeng Zheng, Xiangyu Chen, Shizhe Chen, Qin Jin✉.

🎖 Honors and Awards

- 2025 Ranked 1st in GemBench Challenge at CVPR 2025 Workshop GRAIL.

- 2022 Ranked 3rd in CVPR 2022 Ego4D Natural Language Query Challenge.

- 2021 Ranked 3rd in NIST TRECVID 2021 Ad-hoc Video Search (AVS) Challenge.

- 2021 Ranked 2nd in CVPR 2021 HOMAGE Scene-graph Generation Challenge.

- 2020 Ranked 2nd in ACM MM 2020 Video Relationship Understanding Grand Challenge.

- 2019 Ranked 2nd in ACM MM 2019 Video Relationship Understanding Grand Challenge.

- 2022 National Scholarship for Ph.D. Students.

- 2019 Best Method Prize in ACM MM 2019 Grand Challenge.

- 2019 First Class Scholarship for Ph.D. Students from 2018 to 2021.

- 2015 First Prize in National University Mathematical Modeling Competition of Beijing Area.

📖 Education

- 2018.09 - 2023.06, PhD, Computer Science and Engineering, Renmin University of China, China.

- 2014.09 - 2018.06, Undergraduate, Computer Science and Engineering, Renmin University of China, China.

💻 Work Experience

- 2025.05 - now, Research Scientist; BeingBeyond, Beijing, China.

- 2023.07 - 2025.05, Researcher; Beijing Academy of Artificial Intelligence, Beijing, China.

- 2022.04 - 2022.10, Research Intern; Microsoft Research Asia, Beijing, China.

- 2021.11 - 2022.04, Research Intern; Beijing Academy of Artificial Intelligence, Beijing, China.

🔧 Services

- Conference Reviewer for CVPR, ICCV, ECCV, ACCV, NeurIPS, AAAI, ACM MM.

- Journal Reviewer for IJCV, TCSVT, TMM, JATS.