Exploring anchor-based detection for Ego4D natural language query

Published in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop 2022, 2022

Recommended citation: S Zheng, Q Zhang, B Liu, Q Jin, J Fu. "Exploring anchor-based detection for Ego4D natural language query." 2022 Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshop. https://arxiv.org/abs/2208.05375

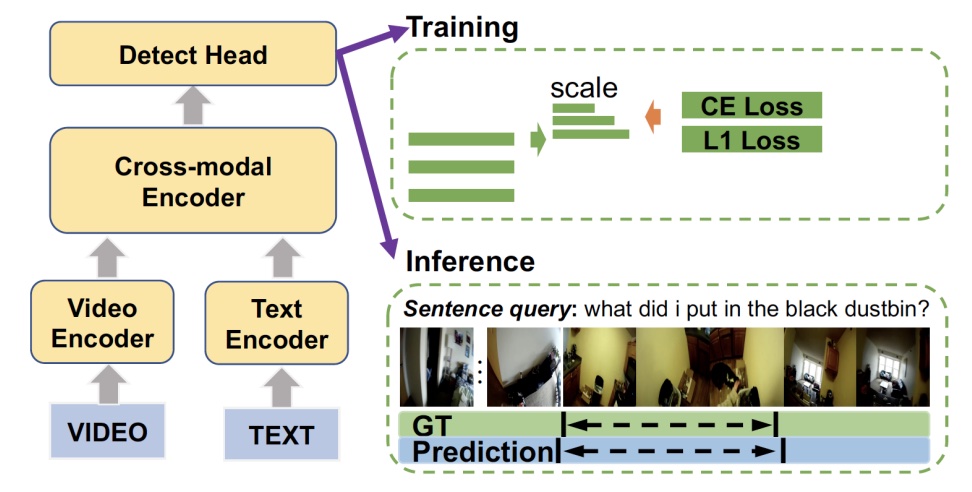

In this paper we present our technical report for the Ego4D natural language query challenge at CVPR 2022. The natural language query task is challenging because it requires a comprehensive understanding of video content. Most previous works address this task on third-person view datasets, while little research interest has so far been placed in the egocentric view. Although great progress has been made, we notice that previous works cannot adapt well to egocentric view datasets such as Ego4D, mainly for two reasons: 1) most queries in Ego4D have an excessively small temporal duration (e.g., less than 5 seconds); 2) queries in Ego4D require much more complex video understanding of long-term temporal order. Considering these, we propose our solution to this challenge to address the above issues.