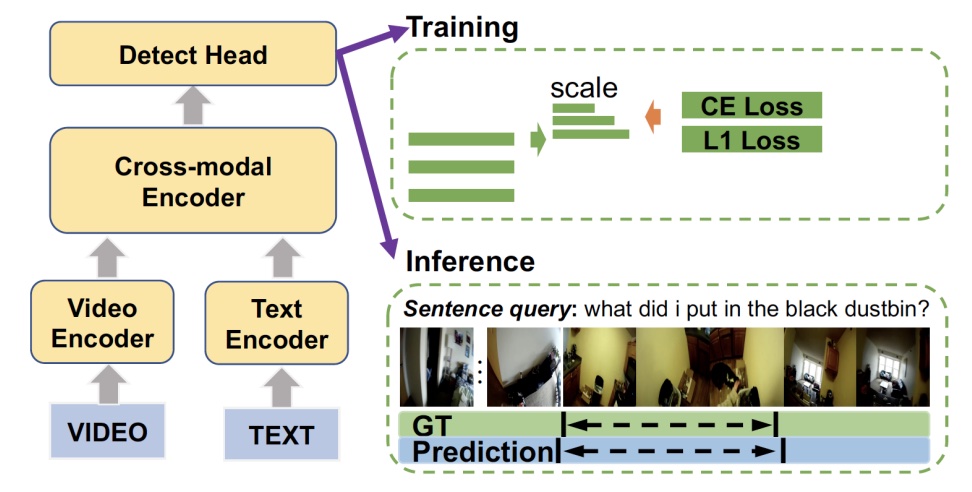

Anchor-Based Detection for Natural Language Localization in Ego-Centric Videos

Published in 2023 IEEE International Conference on Consumer Electronics (ICCE), 2023

Recommended citation: B. Liu, S. Zheng, J. Fu, W.-H. Cheng. "Anchor-Based Detection for Natural Language Localization in Ego-Centric Videos." 2023 IEEE International Conference on Consumer Electronics (ICCE), pp. 1-4. https://ieeexplore.ieee.org/abstract/document/10043460/

The Natural Language Localization (NLL) task aims to localize the moment described by a sentence in a video by predicting its starting and ending timestamps. It requires a comprehensive understanding of both language and video. Much prior work has addressed third-person videos, whereas NLL on ego-centric videos remains under-explored, even though it is critical for understanding the growing volume of ego-centric video and for facilitating embodied AI tasks. Directly adapting existing NLL methods to ego-centric video datasets is challenging for two reasons. First, there is a gap in temporal duration between datasets. Second, queries on ego-centric videos usually require a better understanding of more complex, long-term temporal order. For these reasons, we propose an anchor-based detection model for NLL in ego-centric videos.
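The abstract does not describe the model's internals. As a generic illustration of what "anchor-based" usually means in temporal localization, the sketch below places candidate segments (anchors) of several durations along a video and labels each one by its temporal IoU with a ground-truth moment. This is an assumption for illustration, not the authors' architecture: the function names, the anchor durations, and the 0.5 positive threshold are all hypothetical. The anchor duration list is where the typical length of target moments enters such a design, which is plausibly why a temporal duration gap between datasets matters for anchor-based methods.

```python
from typing import List, Tuple

# Hypothetical illustration only; not the method from the paper.
Segment = Tuple[float, float]  # (start, end) timestamps in seconds


def temporal_iou(a: Segment, b: Segment) -> float:
    """Intersection-over-union of two 1-D time intervals."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def generate_anchors(video_len: float, num_clips: int,
                     durations: List[float]) -> List[Segment]:
    """Centre one anchor of each (hypothetical) duration on every clip,
    clipping anchors so they stay inside the video."""
    clip_len = video_len / num_clips
    anchors: List[Segment] = []
    for i in range(num_clips):
        center = (i + 0.5) * clip_len
        for d in durations:
            start = max(0.0, center - d / 2)
            end = min(video_len, center + d / 2)
            anchors.append((start, end))
    return anchors


def assign_labels(anchors: List[Segment], gt: Segment,
                  pos_thresh: float = 0.5) -> List[int]:
    """Mark an anchor positive (1) if its temporal IoU with the
    ground-truth moment reaches the (assumed) threshold, else 0."""
    return [1 if temporal_iou(a, gt) >= pos_thresh else 0 for a in anchors]


if __name__ == "__main__":
    anchors = generate_anchors(video_len=10.0, num_clips=5, durations=[2.0, 4.0])
    gt = (3.0, 7.0)  # a made-up ground-truth moment for the demo
    labels = assign_labels(anchors, gt)
    best = max(anchors, key=lambda a: temporal_iou(a, gt))
    print(labels)  # [0, 0, 0, 0, 1, 1, 0, 0, 0, 0]
    print(best)    # (3.0, 7.0)
```

In a full detector, a network would score each anchor against the query and usually regress start/end offsets as well; the sketch stops at anchor generation and IoU-based labeling to keep the idea visible.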